New publication in the journal “Fire”: Moritz Rösch, together with colleagues from the German Aerospace Center (DLR), has published “Data-Driven Wildfire Spread Modeling of European Wildfires Using a Spatiotemporal Graph Neural Network”.

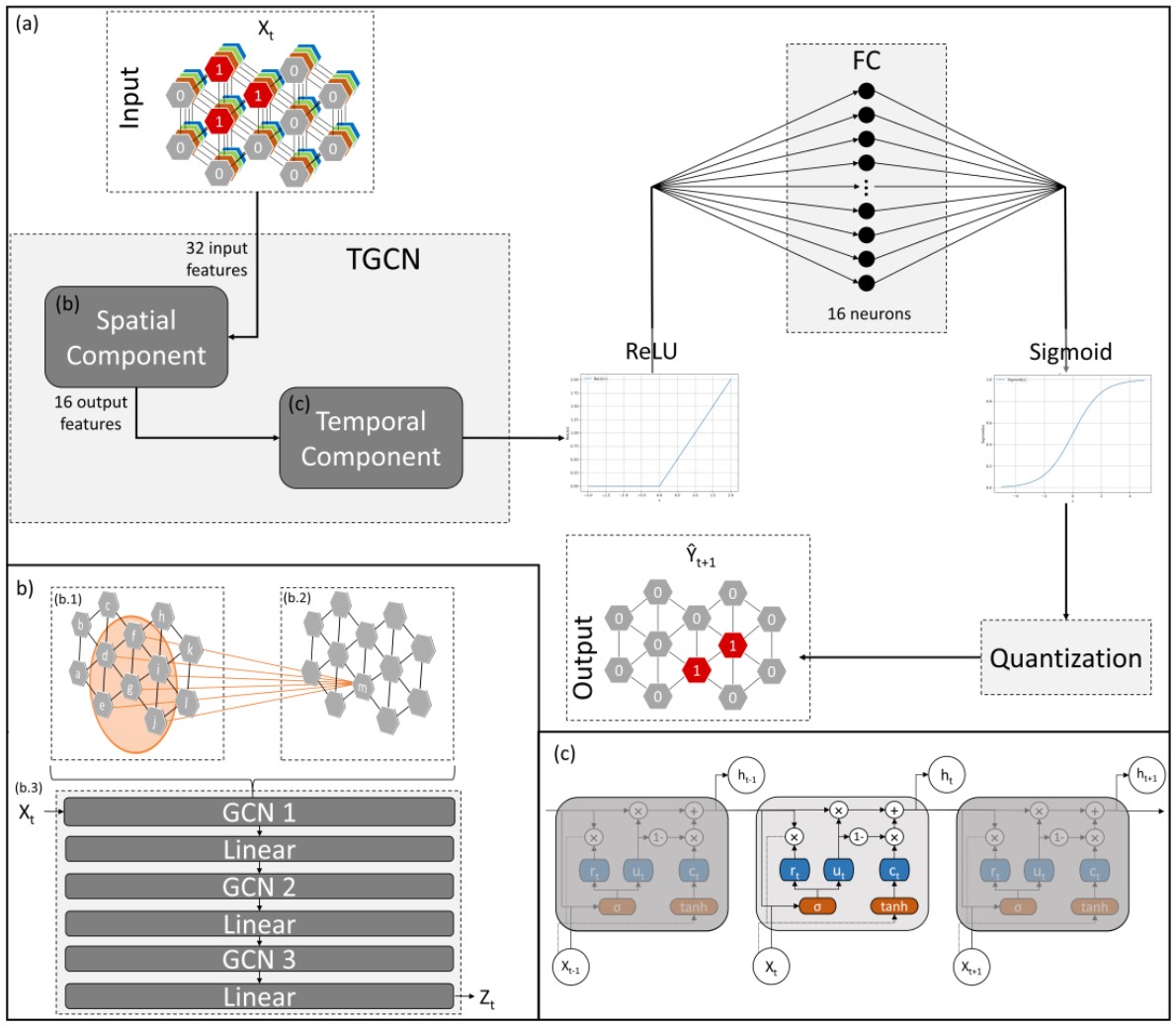

From the abstract: Wildfire spread models are an essential tool for mitigating catastrophic effects associated with wildfires. However, current operational models suffer from significant limitations regarding accuracy and transferability. Recent advances in the availability and capability of Earth observation data and artificial intelligence offer new perspectives for data-driven modeling approaches with the potential to overcome the existing limitations. Therefore, this study developed a data-driven Deep Learning wildfire spread modeling approach based on a comprehensive dataset of European wildfires and a Spatiotemporal Graph Neural Network, which was applied to this modeling problem for the first time. A country-scale model was developed on an individual wildfire time series in Portugal while a second continental-scale model was developed with wildfires from the entire Mediterranean region. While neither model was able to predict the daily spread of European wildfires with sufficient accuracy (weighted macro-mean IoU: Portugal model 0.37; Mediterranean model 0.36), the continental model was able to learn the generalized patterns of wildfire spread, achieving similar performances in various fire-prone Mediterranean countries, indicating an increased capacity in terms of transferability. Furthermore, we found that the spatial and temporal dimensions of wildfires significantly influence model performance. Inadequate reference data quality most likely contributed to the low overall performances, highlighting the current limitations of data-driven wildfire spread models.
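For readers unfamiliar with the metric quoted in the abstract, the following is a minimal sketch of how an Intersection over Union (IoU) score averaged over classes might be computed. It is not taken from the paper: the function names `class_iou` and `weighted_mean_iou`, the two-class setup (0 = unburned, 1 = burned), and the choice to weight each class by its share of reference cells are all assumptions made for illustration, and the authors' exact definition of "weighted macro-mean IoU" may differ.

```python
def class_iou(pred, ref, cls):
    """IoU for one class over flat sequences of per-cell labels."""
    # Cells where prediction and reference both carry the class.
    inter = sum(1 for p, r in zip(pred, ref) if p == cls and r == cls)
    # Cells where either prediction or reference carries the class.
    union = sum(1 for p, r in zip(pred, ref) if p == cls or r == cls)
    # Assumed convention: a class absent from both scores 0.0.
    return inter / union if union else 0.0


def weighted_mean_iou(pred, ref, classes=(0, 1)):
    """Per-class IoU averaged with weights equal to each class's
    share of reference cells (an assumed weighting scheme)."""
    total = len(ref)
    score = 0.0
    for cls in classes:
        support = sum(1 for r in ref if r == cls)
        score += (support / total) * class_iou(pred, ref, cls)
    return score


# Hypothetical toy example: six cells, 1 = burned, 0 = unburned.
predicted = [1, 1, 0, 0, 0, 0]
reference = [1, 0, 1, 0, 0, 0]
score = weighted_mean_iou(predicted, reference)
```

In this toy case the burned class overlaps in one of three cells and the unburned class in three of five, so the burned-class IoU is far lower than the unburned one. This illustrates why a class-averaged score can stay modest even when most unburned cells are predicted correctly.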